BklynMuse: failing fast, retooling faster = version 2

This year, I had the privilege of speaking at Webstock, and one of the things I learned from listening to the other speakers was the benefit of fast, iterative cycles. It was a long plane ride home from New Zealand, but by the end of it, I was determined that we revisit BklynMuse with a simple plan: fix substantial issues as quickly as possible, get it back into the hands of our visitors, watch and learn from what we see happen, then rinse and repeat. This post is going to detail what we saw failing and the changes we’ve made to the guide. Fair warning, this is going to be epic, so jump with me if this interests you.

Let’s recap.

BklynMuse is a gallery guide for web-enabled smartphones (iPhone, Droid, BlackBerry). At its heart, it’s a recommendation system powered by people: as you use it and actively “like” objects in our galleries, the guide will show you recommendations based on those preferences. If you are scratching your head at this point, go back and re-read the primer from the August ’09 launch.
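For anyone who thinks better in code, here is a minimal sketch of what “recommendations powered by people” can look like. This is not the guide’s actual code; it assumes a simple “visitors who liked what you liked also liked…” approach, and the function name `recommend`, the object names, and the scoring rule are all made up for illustration.

```python
from collections import Counter


def recommend(my_likes, all_visitor_likes, limit=3):
    """Suggest objects liked by visitors whose likes overlap with mine.

    Hypothetical scoring: each candidate object earns points equal to the
    number of likes its fan shares with the current visitor.
    """
    mine = set(my_likes)
    scores = Counter()
    for other in all_visitor_likes:
        other = set(other)
        overlap = len(mine & other)
        if overlap == 0:
            continue  # this visitor shares no taste with us; skip them
        for obj in other - mine:
            scores[obj] += overlap
    # Highest score first; ties broken alphabetically so output is stable.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [obj for obj, _ in ranked[:limit]]


# Invented sample data: three earlier visitors and the objects they liked.
earlier_visitors = [
    {"bird lady", "hippo", "mummy case"},
    {"bird lady", "period room"},
    {"totem pole", "tiffany lamp"},
]

suggestions = recommend({"bird lady", "hippo"}, earlier_visitors)
```

With the sample data above, the visitor who shares two likes with you counts for more than the one who shares a single like, so their other favorite comes first in the suggestions.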

Location, location, location.

This is, by far, the biggest fail and still remains the biggest challenge ahead of us. In our version 1 beta testing, 10/10 testers expected the device to automatically locate them within the gallery and were frustrated when it didn’t. The reality is that the technology is just not there yet once you get inside the building. Geolocation works really well in the wild, but it fails in galleries where cell signal is spotty and object location mapping is complicated. In addition, our use of cell-phone repeaters to extend signal within our walls does nothing but make an even bigger mess of the issue.

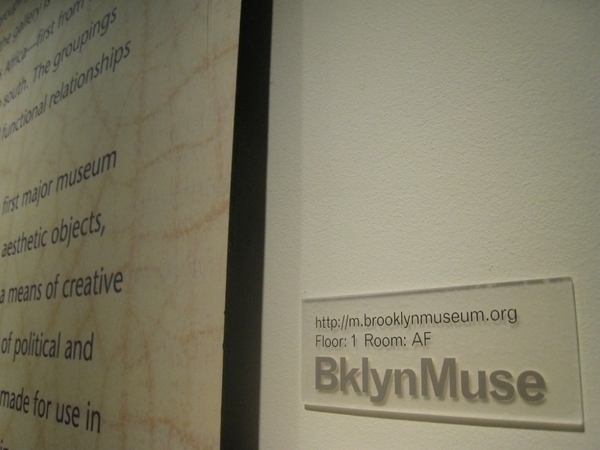

Signage #fail. Signage was too small and got lost in a sea of sameness trying to blend in.

In version 1, we assigned room codes to each area of the museum, but ran into major problems when the signs were too small and blended too well into the surroundings. With so many signs going into the building, the general concern was that it would be overwhelming for people who wouldn’t want to use the system, so the choice was made to try to make this signage blend in. Of course, that meant it was difficult for those using the system to find the signs, so we had a Catch-22 going on.

In version 1, above, visitors had trouble with drop-down menus as a way to set location.

When folks did find the signs, they found the drop-down menus difficult to use. We thought about using QR codes or similar, but as an institution with a community-minded mission, we want the guide to remain as accessible as possible, and that means striving to ensure it works across many devices. What we give up in a scenario like this is the ability to use built-in hardware easily. Not to mention, putting QR codes on the 5,764 objects on view would be just a tad distracting. We thought about maps, but maps present a problem on a small screen (doable, but tough) and, most importantly, for every person who understands a floor plan there’s another who can’t stand them. Maps might solve the problem for some people, but they could easily create frustration for another set of users.

In version 2, above, we focus on accession number searching. If people have trouble, we’ve got an example to explain what to look for on a label.

In the end, we simplified in version 2. Room codes are gone. We’ve re-engineered the home page to focus on accession number searching, and we’ve added some education about what we mean by accession number (because, that’s obvious to everyone…right?).

Congrats, you found the Bird Lady! Now, view the objects in this room.

When you find the object you are looking for, that automatically sets your location, so you can browse other things in the room. This seems like a surprisingly simple solution to a complex problem, but perhaps that’s too easy? I guess we are about to find out.
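Here is a rough sketch of that idea, assuming each object record simply carries the room it lives in. This is an illustration, not the guide’s real code: the `GalleryObject` and `Visit` names, the accession numbers, and the room names are all invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GalleryObject:
    accession_number: str
    title: str
    room: str


def normalize(accession: str) -> str:
    """Forgive stray spaces and letter case typed on a small keyboard."""
    return accession.strip().lower().replace(" ", "")


class Visit:
    """Tracks one visitor; location is a side effect of finding an object."""

    def __init__(self, catalog):
        self._catalog = list(catalog)
        self._by_number = {normalize(o.accession_number): o for o in self._catalog}
        self.current_room = None  # unknown until the first successful search

    def find(self, accession: str):
        obj = self._by_number.get(normalize(accession))
        if obj is not None:
            # Finding an object tells us where the visitor is standing.
            self.current_room = obj.room
        return obj

    def objects_in_current_room(self):
        if self.current_room is None:
            return []
        return [o for o in self._catalog if o.room == self.current_room]


# Invented catalog entries, for illustration only.
CATALOG = [
    GalleryObject("2010.1.1", "Bird Lady", "Room A"),
    GalleryObject("2010.1.2", "Painted Jar", "Room A"),
    GalleryObject("2010.2.1", "Portrait", "Room B"),
]

visit = Visit(CATALOG)
found = visit.find(" 2010.1.1 ")
nearby_titles = [o.title for o in visit.objects_in_current_room()]
```

A failed search leaves the location untouched, so a typo in an accession number does not wipe out the room the visitor already set.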

Digging for gold.

Overwhelmingly, beta testers told us two things. They loved finding the “notes,” off-the-cuff remarks from staffers and visitors about the objects, but as much as they loved them, the notes were too difficult to find. In version 1, you had to click on an object to see if there was content there that might interest you. In version 2, we’ve re-engineered the recommended tab to indicate what kind of content is behind the click.

(left) Version 1: Difficult to see what kind of content is available. (right) Version 2: Adjusted this view to include what comes after the click.

Too much.

You may be spotting a trend right about now: simplification. Version 1 of this guide wanted to be everything to all users all of the time and it was a mess! That’s my fault for growing the spec and getting too excited in the first phase, so now, a few hand slaps later, we are cleaning up. Moods are gone. Sets have been simplified and combined into comments. Settings have been pared down to a minimum. We took a weed whacker to the guide in the name of less is more. While we are not at a Miesian level yet, we’ve come far in version 2 and may go one more round in our next iteration as we see how visitors use the device.

Difficult to let go of this, but it proved to be just too much and we needed to simplify. Moods, seen in version 1 above, are now gone.

Have a say.

Beta testers also told us that the “notes” inspired them to want to comment too, right there in the gallery without having to go back to the website, so we are now allowing comments on each of the object pages. This could be a total disaster: keyboards on small devices are tough and that may cause a lot of frustration. We are worried about the usual issues with moderation and a possible jump in inquiries, but it’s worth a shot. The electronic comment books teach us a lot about our exhibitions and installations, so enabling something similar on an object level may yield interesting results. Either that or it will be the first thing we take away in version 3!

Let’s talk style.

We’ve restyled much of the UX so that it has more iPhone-like elements. Version 1 was styled much more like our website and that just didn’t work. Overall, elements have a much more clickable feel to them…including the “like” button, which is something we really want to see people using. (Tip of the hat to Seb Chan for stating the obvious about this over dinner one evening in NZ. Thanks, Seb!)

We have no idea if any of these changes will help, but the aim was to make a fast set of adjustments, get it into the hands of visitors and then make more changes based on what we see happen.

If you made it this far, stay tuned. I’ll be back tomorrow with more news. Curious? Here’s a hint.

Shelley Bernstein is the former Vice Director of Digital Engagement & Technology at the Brooklyn Museum where she spearheaded digital projects with public participation at their center. In the most recent example—ASK Brooklyn Museum—visitors ask questions using their mobile devices and experts answer in real time. She organized three award-winning projects—Click! A Crowd-Curated Exhibition, Split Second: Indian Paintings, GO: a community-curated open studio project—which enabled the public to participate in the exhibition process.

Shelley was named one of the 40 Under 40 in Crain's New York Business and her work on the Museum's digital strategy has been featured in the New York Times.

In 2016, Shelley joined the staff at the Barnes Foundation as the Deputy Director of Digital Initiatives and Chief Experience Officer.