What encourages people to ASK about certain objects?

While I wanted to learn more about visitors’ complete interactions through the app, I had no way to systematically dive into chats, so I chose to focus on another aspect of user behavior: in-gallery ASK labels. The first iteration of ASK labels used question prompts. These were later replaced with generic text about the app (e.g., “ask us for more info” or “ask us about what you see”), and the labels are now back to questions (and a few hopefully provocative statements). The switch back to questions was prompted by anecdotal evidence that the generic prompts weren’t motivating people to use the app. To check this assumption, I looked at how often visitors asked about each object (measured by snippet counts) and whether that frequency changes for objects with or without ASK labels. Spoiler alert: it does.

To start, I needed to audit which objects currently have, or have ever had, ASK-related labels. This was more challenging than it sounds, because over the years the record of which labels actually made it into the galleries was not always kept up to date. Identifying which objects currently have ASK labels was easy: I walked through the museum and made notes. For labels that are no longer up, I relied on two installments of labels that had been tagged in the dashboard (a first iteration of questions and a later iteration of generic labels).

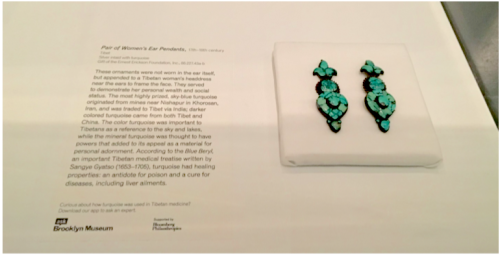

The prompt for this ASK label reads: Curious about how turquoise was used in Tibetan medicine? Download our app to ask an expert.

The audit of existing labels could be more detailed. I was able to examine each label’s text and snippet count, and to compare the label’s question with the questions visitors actually asked. I would also have liked to look at where the labels were placed (wall, case, pedestal, etc.) to see how placement influences behavior, but time constraints prevented me from pursuing this line of inquiry.

This comparison offered some insight into how the labels affect user behavior. Based on snippet counts, objects with current ASK labels have on average 16.19 snippets, while objects with no labels have on average 8.28. In other words, objects with current ASK labels receive roughly twice as many snippets (16.19 / 8.28 ≈ 1.96) as objects without them.

To take it a step further, I broke down snippet counts by label type, including every object that has ever had a label (according to the dashboard categories). Objects that have or had generic labels average 14.15 snippets. Objects that have or had question labels average 19.90 snippets. Objects without any type of ASK label average 5.96 snippets. This suggests that question prompts are the most effective of these label types at getting users to engage with objects and the app.

One object was left out of these counts. The Dinner Party has over 400 snippets and deserves an analysis of its own. It is asked about so much more frequently than any other object that it skewed the averages, so I temporarily removed it from the dataset.
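The label comparison above comes down to grouping objects by label type and averaging their snippet counts, with The Dinner Party set aside as an outlier. Here is a minimal Python sketch of that calculation. The record layout, the category names, the function name `mean_snippets_by_label`, and every number except The Dinner Party’s “over 400” are hypothetical placeholders, not the museum’s actual dashboard data.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical records: (object title, label type, snippet count).
# Label types are illustrative: "question", "generic", or "none".
objects = [
    # The post only says "over 400"; 400 is a placeholder. Its label history
    # is not stated, so it is marked "unknown" (it is excluded anyway).
    ("The Dinner Party", "unknown", 400),
    ("Object A", "question", 22),
    ("Object B", "question", 18),
    ("Object C", "generic", 14),
    ("Object D", "generic", 12),
    ("Object E", "none", 6),
    ("Object F", "none", 4),
]

# Objects set aside because they would skew the averages.
EXCLUDED = {"The Dinner Party"}


def mean_snippets_by_label(records, excluded=EXCLUDED):
    """Return the average snippet count per label type, skipping excluded objects."""
    counts = defaultdict(list)
    for title, label_type, snippets in records:
        if title in excluded:
            continue
        counts[label_type].append(snippets)
    return {label_type: mean(values) for label_type, values in counts.items()}


averages = mean_snippets_by_label(objects)
for label_type, avg in sorted(averages.items()):
    print(f"{label_type}: {avg:.2f} snippets on average")
```

With this toy data the sketch reports 13.00 for generic, 5.00 for none, and 20.00 for question labels. Excluding by title mirrors what the post describes; a threshold-based outlier rule would be an alternative, but it is not what was done here.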

Since I had every asked-about object (over 2,000 different artworks) and its snippet count at my disposal, I also wanted to look at some of the less frequently asked-about objects. I put together a spreadsheet of all the objects, sorted by total snippets. From this I learned that objects with more than 50 snippets make up only 2% of all asked-about objects, and objects with 20 or more snippets make up 6%. The vast majority of objects (about 94%) have fewer than 20 questions asked about them.
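The percentages above come from counting how many objects clear a given snippet threshold. A minimal Python sketch of that calculation follows, using a made-up list of ten snippet counts rather than the real spreadsheet; the function name `share_of_objects` is hypothetical. Because the post uses “above 50” (strict) for one cut-off and “20 and above” (inclusive) for the other, the sketch supports both.

```python
def share_of_objects(snippet_counts, threshold, inclusive=True):
    """Return the fraction of objects whose snippet count meets the threshold.

    inclusive=True counts objects with count >= threshold;
    inclusive=False counts only objects with count > threshold.
    """
    if not snippet_counts:
        return 0.0
    if inclusive:
        hits = sum(1 for c in snippet_counts if c >= threshold)
    else:
        hits = sum(1 for c in snippet_counts if c > threshold)
    return hits / len(snippet_counts)


# Hypothetical snippet counts for ten objects (toy data, not the real spreadsheet),
# sorted from most to least asked about, as in the spreadsheet described above.
counts = sorted([2, 2, 3, 5, 8, 11, 19, 21, 48, 60], reverse=True)

above_50 = share_of_objects(counts, 50, inclusive=False)
twenty_plus = share_of_objects(counts, 20)
fewer_than_20 = 1 - twenty_plus

print(f"More than 50 snippets: {above_50:.0%}")
print(f"20 or more snippets: {twenty_plus:.0%}")
print(f"Fewer than 20 snippets: {fewer_than_20:.0%}")
```

On the toy list this prints 10%, 30%, and 70%. The “fewer than 20” share is simply the complement of the “20 or more” share, which is how the roughly 94% figure follows from the 6% figure.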

What made that 2% of objects so appealing, and what makes visitors want to ask about less “popular” objects? Apart from the ASK labels, I wanted to see whether there was anything glaringly obvious about the popular or less popular objects. I individually examined a selection of objects with 50 or more snippets and recorded various qualities of each. I did the same with a selection of objects that had only 2 snippets. Here’s what I found:

What makes people ask about objects?

- Is larger in size

- Has religious connotations

- Has been on view for an extended period

- Was made by an extremely well-known artist (e.g., Rodin) or depicts a recognizable figure (e.g., Jesus)

- Is a mummy

Why might people ask fewer questions about objects?

- Is smaller in size

- Is a functional object

There is still much to be answered about the second question. However, since 98% of objects have fewer than 50 snippets, there simply wasn’t enough time to examine each object’s qualities and questions in more depth. I also wanted to dedicate my remaining time to the full visitor journey through the app, but I still had no way to search through chats.

Stay tuned for my final blog post as I detail how this was resolved and what more I learned.

Sydney Stewart is the 2018/19 Pratt Visitor Experience & Engagement Fellow at the Brooklyn Museum. She is currently pursuing her M.S. in Museums & Digital Culture at Pratt’s School of Information and has previous experience in collections management, exhibitions, visitor research and digital media. Her primary interests are in creating and evaluating the ways visitors can digitally interact with museum collections. Sydney’s current research focus is analyzing user data from ASK Brooklyn Museum.
