Initial Insights from ASK Data

During my first semester as the Pratt Visitor Experience & Engagement Fellow, I learned a significant amount about ASK user behavior, despite the limitations of the data sets, and was able to answer some of the following questions.

What does pre-download visitor behavior look like?

The notes from ASK Ambassadors provided critical insight into behavior around the app, especially before downloading. Two main trends are worth noting:

Downloads happen most often and most easily at the start of a visit. Ambassadors had the most success approaching people and generating interest in the app early in a visit. Favorite spots include the directory in the lobby (just past the admissions point), the first-floor exhibitions, and the elevators.

Ambassadors help lower a barrier to entry. ASK Ambassadors frequently encountered visitors who struggled to use the app because of technical issues (e.g., Location Services or Bluetooth not being turned on). In some cases, visitors had already downloaded the app but could not get it to work because of a setting or password issue on their phone. An ongoing challenge with ASK is that visitors aren’t always sure what questions to ask (especially where there are no labels with suggested questions), so ambassadors helped provide a starting point.

Ambassadors were crucial in resolving these issues of uncertainty, which highlights the importance of having staff on the floor who are well trained in whatever technology the museum is trying to promote.

Who are the users and non-users?

Based on ASK Ambassador notes, I was able to sketch a few user and non-user personas. In the chart below, personalities highlighted in red indicate visitors who are not likely to use the app, those highlighted in yellow indicate visitors who could be convinced to use it, and those highlighted in green indicate visitors who are very enthusiastic about using it.

Data from Sara’s Pratt class, which is currently conducting user surveys through the ASK app, should help refine this in the near future (more on that in a future post from Sara).

Where are people asking questions?

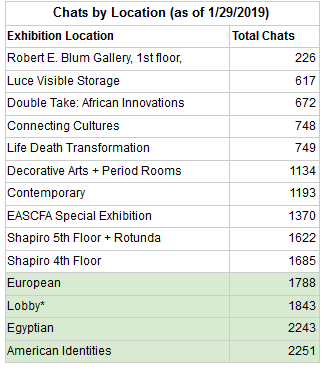

As mentioned in my previous post, locations proved a huge challenge to identify. To start, I had to create this table manually by filtering the total chats metric by location and entering each value by hand.

As you can see, the top gallery locations are American Identities (now American Art), Egyptian, the Lobby, and European. Beyond the fact that the locations tracked by the dashboard do not account for visitors who use the text option, there are other glaring issues with taking this data at face value. The first is that, from reading through chats and the kinds of questions asked, it became clear that areas without descriptive labels were often areas where visitors asked a lot of questions, so a high chat count may reflect missing interpretation as much as visitor interest. Additionally, the most asked-about artwork by far is The Dinner Party, which is located in the Elizabeth A. Sackler Center for Feminist Art (EASCFA). Despite this, EASCFA is not one of the top gallery locations, because The Dinner Party is tracked separately and is not included in the EASCFA data. Nuances like these can provide a more complete picture of app use and visitor interest, but they are not reflected in this data. If the question is where the app is being used in the museum, this data can provide a general overview. However, if the question is what people are asking about, this data does not offer any valuable insight.
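The manual tally and the Dinner Party problem above could, in principle, be handled together if chats were available as raw records rather than dashboard totals. The Python sketch below is purely illustrative: it assumes a hypothetical export in which each chat is a dict with an optional "location" key, assumes chats sent through the text option carry no location, and uses a hypothetical ROLLUP mapping to fold separately tracked objects into their parent gallery. None of these names come from the ASK dashboard.

```python
from collections import Counter

# Hypothetical mapping from separately tracked locations to the gallery
# that contains them; real dashboard location names may differ.
ROLLUP = {
    "The Dinner Party": "EASCFA",
}


def chats_by_location(chats, rollup=None):
    """Tally chats per location from a list of chat records.

    Each record is assumed to be a dict with an optional "location" key.
    Chats with no location (assumed here to include text-option chats)
    are counted under "Unknown" instead of being silently dropped.
    """
    rollup = rollup or {}
    counts = Counter()
    for chat in chats:
        location = chat.get("location") or "Unknown"
        # Fold a tracked sub-location into its parent gallery if mapped.
        counts[rollup.get(location, location)] += 1
    return counts


# Hypothetical sample records, for illustration only (not real ASK data).
sample = [
    {"location": "Egyptian"},
    {"location": "The Dinner Party"},
    {"location": "EASCFA"},
    {"location": None},
]

print(chats_by_location(sample).most_common())
print(chats_by_location(sample, ROLLUP).most_common())
```

Keeping the rollup as a separate, optional mapping means the raw per-location counts are still available when the question is simply where the app is being used.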


What are people asking about?

Next, I wanted to know which objects people were asking about and the kinds of questions they asked. The dashboard actually made the start of this easy, since it offers some of that information. I pulled the most popular objects and snippets of all time, as well as by year, to see how they varied. The problem was that a handful of objects (such as The Dinner Party or the Assyrian Reliefs) are so popular that they kept me from learning anything new about user behavior. Through anecdotal information (having to answer the same questions about the same objects over four years) and the dashboard metrics, we already know the most asked-about objects and the most popular questions. However, over 2,000 objects have been asked about, and the dashboard only pulls the top 100 works. I started to wonder: what about the objects that don’t make that list?
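One way to look past a top-100 cutoff is to rank every object and keep the remainder instead of discarding it. The Python sketch below assumes a hypothetical export with one entry per question naming the object it was about; that format, the long_tail helper, and the sample data are all illustrative assumptions, not the dashboard's actual output.

```python
from collections import Counter


def long_tail(object_ids, top_n=100):
    """Split per-object question counts into the top N and everything else.

    `object_ids` is assumed to hold one entry per question, naming the
    object it was about (a hypothetical export format).
    Returns two lists of (object, count) pairs, ranked by count.
    """
    counts = Counter(object_ids)
    ranked = counts.most_common()  # every object, highest count first
    return ranked[:top_n], ranked[top_n:]


# Hypothetical data: two very popular objects plus many asked about once.
questions = ["The Dinner Party"] * 50 + ["Assyrian Reliefs"] * 30
questions += [f"object-{i}" for i in range(10)]

top, tail = long_tail(questions, top_n=2)
print(len(top), "objects in the top list;", len(tail), "outside it")
print("share of questions outside the top list:",
      sum(n for _, n in tail) / len(questions))
```

With real data, the default top_n=100 would mirror the dashboard's cutoff, and the tail list would hold the objects that never appear there.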

Sydney Stewart is the 2018/19 Pratt Visitor Experience & Engagement Fellow at the Brooklyn Museum. She is currently pursuing her M.S. in Museums & Digital Culture from Pratt’s School of Information and has previous experience in collections management, exhibitions, visitor research and digital media. Her primary interests are in creating and evaluating the ways visitors can digitally interact with museum collections. Sydney’s current research focus is analyzing user data from ASK Brooklyn Museum.
